NVIDIA Nemotron 3: The New Standard for Open-Source Agentic AI

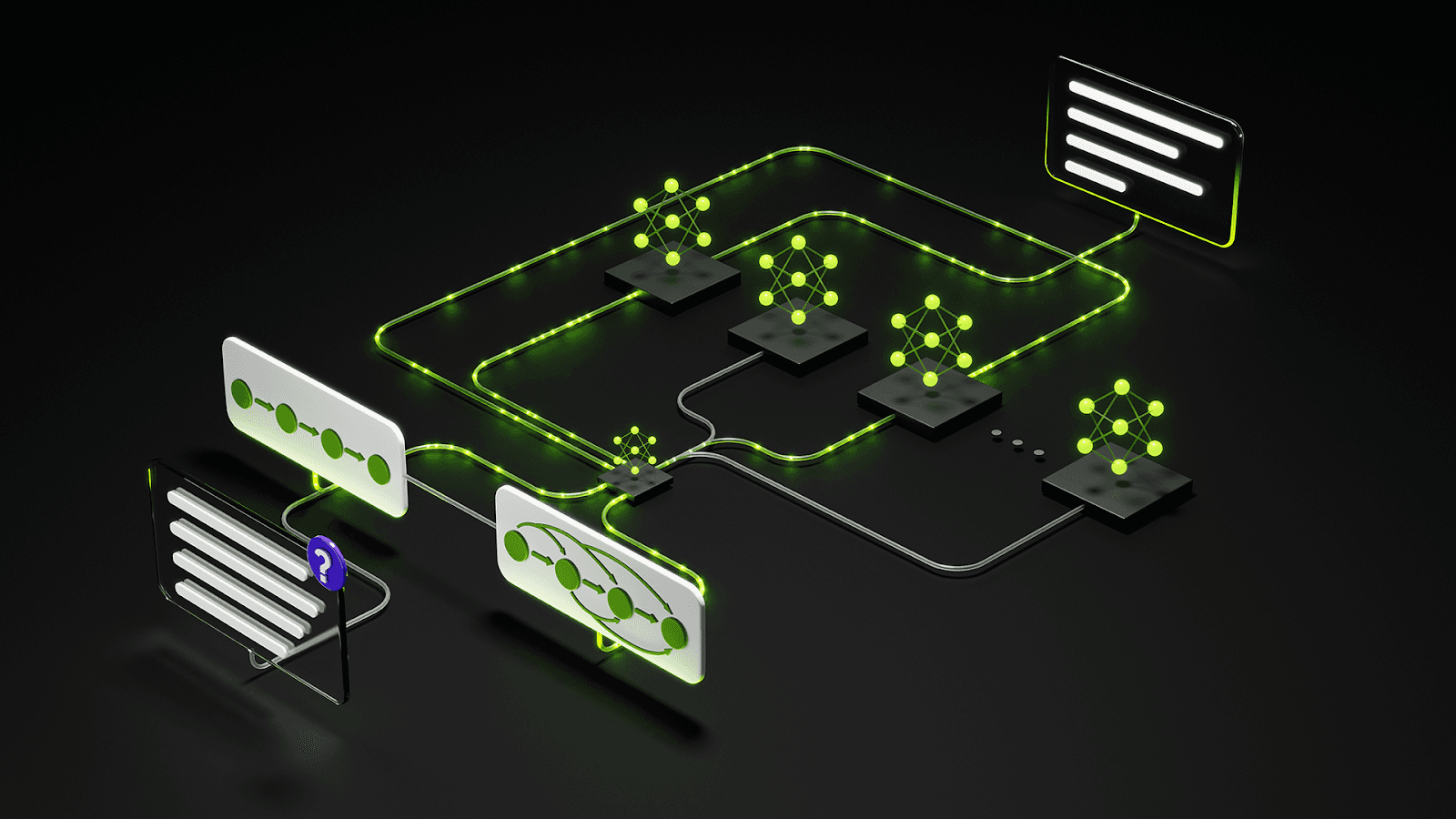

The era of passive chatbots is rapidly evolving into the age of agentic AI—systems that can plan, reason across multiple steps, and take actions by calling tools and APIs. With the release of Nemotron 3, NVIDIA is explicitly targeting that shift: this is a family of open models designed to become the “brain” for reliable agents that operate in real workflows.

This update focuses on what actually matters for builders: model lineup, long-context capabilities, tool-use readiness, deployment paths, and the practical tradeoffs you’ll face when choosing Nemotron 3 for production.

At-a-Glance

| Category | What Nemotron 3 Brings | Why It Matters for Agentic AI |
|---|---|---|
| Model family | Multiple sizes (Nano → Super → Ultra) | Pick the right cost/latency/quality tier for your agent |
| Long context | Up to 1,000,000 tokens of context | Lets agents keep long work histories, documents, and plans in context |
| Tool readiness | Emphasis on tool use / function calling + safety | Agents that can actually do things (DB queries, scripts, web tasks) |
| Optimization | Built to run efficiently on NVIDIA’s inference stack | Lower latency and better throughput for interactive agents |
| Open availability | Published through the NVIDIA catalog and popular model repositories | Easier adoption, fine-tuning, and private deployment options |

Model Lineup and Positioning

Nemotron 3 is presented as a family to cover a wide spread of deployment needs—from cost-sensitive applications to enterprise-grade agent systems.

| Model | Family Positioning | What You’d Use It For |
|---|---|---|
| Nemotron 3 Nano | Efficiency-first, agent-ready baseline | Local/edge prototypes, cost-sensitive services, RAG + tooling agents |
| Nemotron 3 Super | Higher capability tier | Production agents with heavier reasoning needs, broader tool repertoires |
| Nemotron 3 Ultra | Highest tier | Complex enterprise agents, multi-agent orchestration, best-quality runs |

Think of the lineup as a ladder: Nano is where most indie teams will start, while Super and Ultra are the tiers enterprises will pay for when accuracy and reliability dominate.

Beyond Chat: What “Agentic” Actually Requires

A model that powers an agent must consistently handle four things:

- Goal decomposition (breaking a task into steps)

- State tracking (remembering decisions, intermediate results, and constraints)

- Tool selection and execution (deciding when to call tools and with which parameters)

- Safety / guardrails (to reduce hallucination-driven actions)

Nemotron 3 is framed to address these agentic requirements—especially around steerability, tool usage, and enterprise safety.
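To make the four requirements concrete, here is a minimal, self-contained sketch of an agent loop. The planner is a hard-coded stand-in for the model, and the tool names (`lookup_order`, `send_email`) and the allowlist are hypothetical; a real agent would get the plan and tool choices from the LLM and replan after each result.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical tool registry: name -> callable. Real tools would wrap DBs, scripts, or APIs.
TOOLS: dict[str, Callable[..., str]] = {
    "lookup_order": lambda order_id: f"order {order_id}: shipped",
    "send_email": lambda to, body: f"email queued for {to}",
}

# Hypothetical guardrail: only these tools may run without human approval.
ALLOWED_TOOLS = {"lookup_order"}


@dataclass
class AgentState:
    goal: str
    plan: list[dict] = field(default_factory=list)
    history: list[tuple[str, str]] = field(default_factory=list)


def plan_steps(goal: str) -> list[dict]:
    """Stand-in for the model's goal decomposition (hard-coded for the sketch)."""
    return [
        {"tool": "lookup_order", "args": {"order_id": "A-17"}},
        {"tool": "send_email", "args": {"to": "customer@example.com", "body": "Your order shipped."}},
    ]


def run_agent(goal: str) -> AgentState:
    state = AgentState(goal=goal, plan=plan_steps(goal))  # 1) goal decomposition
    for step in state.plan:
        name = step["tool"]                               # 3) tool selection (from the plan)
        if name not in ALLOWED_TOOLS:                     # 4) safety / guardrail check
            state.history.append((name, "BLOCKED: requires approval"))
            continue
        result = TOOLS[name](**step["args"])              # 3) tool execution
        state.history.append((name, result))              # 2) state tracking
    return state
```

Running `run_agent("Tell the customer their order shipped")` records the lookup result in `history` and marks the email step as blocked, so every decision stays inspectable after the run.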

Key Technical Capabilities

1) Long Context: Up to 1M Tokens

Nemotron 3 highlights support for up to 1,000,000 tokens of context. In agent systems, this is not a vanity metric—long context can dramatically simplify design:

- Keep long meeting notes, tickets, or requirements in-context

- Preserve a long-running agent plan + tool-call history

- Run deeper retrieval-augmented generation (RAG) pipelines with fewer chunking compromises

| Design Choice | Short Context World | Long Context World (Nemotron 3) |
|---|---|---|
| RAG chunking | Aggressive chunking + more retrieval calls | Fewer chunks, fewer calls, more global coherence |
| Agent memory | External memory store required early | Can keep more state directly in-context |
| Debuggability | Harder to reproduce past state | Easier to replay long histories and inspect failures |
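Even with a 1M-token window, an agent still has to budget what goes into context. The sketch below packs a system prompt, priority-ordered documents, and the most recent history into a fixed budget while reserving room for the model's output. The 4-characters-per-token estimate is a rough assumption for English text; real budgeting should use the model's own tokenizer, and the served context limit may be configured lower than the advertised maximum.

```python
CONTEXT_LIMIT = 1_000_000  # advertised maximum; your serving config may set a lower limit


def estimate_tokens(text: str) -> int:
    """Rough heuristic (~4 characters per token); use the model's tokenizer for real counts."""
    return max(1, len(text) // 4)


def pack_context(system: str, documents: list[str], history: list[str],
                 reserve_for_output: int = 8_000,
                 limit: int = CONTEXT_LIMIT) -> list[str]:
    """Return the pieces that fit: system prompt, then documents in priority order,
    then as much of the most recent history as the remaining budget allows."""
    budget = limit - reserve_for_output - estimate_tokens(system)
    if budget < 0:
        raise ValueError("system prompt alone exceeds the budget")

    packed_docs = []
    for doc in documents:  # documents are assumed to be pre-sorted by priority
        cost = estimate_tokens(doc)
        if cost > budget:
            break
        packed_docs.append(doc)
        budget -= cost

    recent = []
    for turn in reversed(history):  # walk from the newest turn backwards
        cost = estimate_tokens(turn)
        if cost > budget:
            break
        recent.append(turn)
        budget -= cost

    # Restore chronological order for the history that made it in.
    return [system, *packed_docs, *reversed(recent)]
```

Dropping the oldest turns first keeps the current plan and latest tool results in view, which is usually what an agent needs to continue a task.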

2) Steerability and Alignment (SteerLM)

NVIDIA positions SteerLM as a way to steer style/behavioral attributes at inference time. For agentic products, steerability is not just “tone control”—it’s a practical tool for:

- Switching between concise execution mode vs explanatory audit mode

- Adapting responses for different roles (support agent vs engineering agent)

- Reducing risk by tightening behavior in production contexts
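SteerLM's actual conditioning format is model-specific, so the following is only a sketch of the application side: choosing a behavior profile per request and rendering it into the system prompt. The mode names, attribute names, role prompts, and the `[attributes]` line are hypothetical placeholders, not SteerLM's real input format; check the model card for what a given checkpoint supports.

```python
# Hypothetical behavior profiles; attribute names and the 0-4 scale are illustrative only.
MODES = {
    "execute": {"verbosity": 1, "explanation": 0},  # concise execution mode
    "audit": {"verbosity": 4, "explanation": 4},    # explanatory audit mode
}

# Hypothetical role prompts for different agent personas.
ROLE_PROMPTS = {
    "support": "You are a customer-support agent. Be courteous and avoid internal jargon.",
    "engineering": "You are an engineering agent. Be precise and cite file paths and commands.",
}


def build_system_prompt(role: str, mode: str, production: bool = True) -> str:
    """Render role + mode (+ production restrictions) into a single system prompt."""
    attrs = ",".join(f"{name}:{value}" for name, value in sorted(MODES[mode].items()))
    lines = [ROLE_PROMPTS[role], f"[attributes] {attrs}"]
    if production:
        # Tighter behavior in production contexts.
        lines.append("Only call tools from the approved list; ask before irreversible actions.")
    return "\n".join(lines)
```

Keeping profiles in data rather than scattered across prompts makes it easy to switch a support agent into audit mode for a review, or to relax restrictions in a sandbox, without touching agent logic.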

3) Tool Use and Function Calling

Agentic systems succeed or fail on tool use. Nemotron 3 is explicitly pitched for tool-aware behaviors—identifying when to use a tool, producing structured calls, and integrating tool outputs back into reasoning.

Practical examples where this matters:

- SQL / analytics agents: translate request → query → validate → summarize

- Code agents: run linters/tests and iterate

- Ops agents: call internal APIs with strict schemas and permissions
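Structured calls only help if the application validates them before executing anything. This sketch assumes the model emits a JSON object of the form `{"name": ..., "arguments": {...}}` (an assumption; the exact tool-call format depends on the chat template and serving stack) and checks it against hypothetical schemas for `run_sql` and `run_tests`.

```python
import json

# Hypothetical tool schemas: parameter name -> (expected Python type, required?).
# A production system would more likely use full JSON Schema validation.
SCHEMAS = {
    "run_sql": {"query": (str, True), "limit": (int, False)},
    "run_tests": {"path": (str, True)},
}


class ToolCallError(ValueError):
    """Raised for malformed tool calls; the message can be fed back to the model."""


def parse_tool_call(raw: str) -> tuple[str, dict]:
    """Parse and validate a call of the assumed form {"name": ..., "arguments": {...}}."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ToolCallError(f"not valid JSON: {exc}") from exc
    if not isinstance(call, dict):
        raise ToolCallError("tool call must be a JSON object")

    name, args = call.get("name"), call.get("arguments", {})
    if not isinstance(name, str) or name not in SCHEMAS:
        raise ToolCallError(f"unknown tool: {name!r}")
    if not isinstance(args, dict):
        raise ToolCallError("arguments must be a JSON object")

    schema = SCHEMAS[name]
    unexpected = sorted(set(args) - set(schema))
    if unexpected:
        raise ToolCallError(f"unexpected arguments: {unexpected}")
    for param, (expected_type, required) in schema.items():
        if param not in args:
            if required:
                raise ToolCallError(f"missing required argument: {param}")
            continue
        # Exact type match, so JSON true/false is not accepted where an int is expected.
        if type(args[param]) is not expected_type:
            raise ToolCallError(f"{param} must be of type {expected_type.__name__}")
    return name, args
```

On a `ToolCallError`, a common pattern is to return the error text to the model as the tool result so it can correct the call on the next turn instead of executing something malformed.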

4) Enterprise Guardrails (NeMo Guardrails Integration)

For real businesses, the question is not “can the model talk?” but “can it act safely?” Nemotron 3 is aligned with NVIDIA’s guardrails ecosystem, supporting patterns like:

- Allowed tools / disallowed tools

- Safety policies for tool calls

- Output validation and refusal behavior
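NeMo Guardrails expresses policies like these in its own configuration files; the plain-Python sketch below is not its API, only an illustration of the checks such a layer performs: a tool denylist and allowlist, a per-tool argument policy, and a simple output filter. The tool names and patterns are hypothetical, and the regex-based SQL check is deliberately crude; in production, pair it with database-level permissions such as a read-only role.

```python
import re
from dataclasses import dataclass


@dataclass
class Decision:
    allowed: bool
    reason: str = ""


ALLOWED_TOOLS = {"run_sql", "search_docs"}       # hypothetical allowlist
DENIED_TOOLS = {"delete_records", "transfer_funds"}  # hypothetical denylist

# Crude read-only check: must start with SELECT or WITH, and no ';' except an optional
# trailing one (blocks stacked statements such as "SELECT 1; DROP TABLE t").
READ_ONLY_SQL = re.compile(r"^\s*(SELECT|WITH)\b[^;]*;?\s*$", re.IGNORECASE)

# Crude output filter for credential-like content.
SECRET_PATTERN = re.compile(r"\b(?:api[_-]?key|password)\s*[:=]", re.IGNORECASE)


def check_tool_call(name: str, args: dict) -> Decision:
    """Policy for tool calls, applied before anything executes."""
    if name in DENIED_TOOLS:
        return Decision(False, f"{name} is explicitly disallowed")
    if name not in ALLOWED_TOOLS:
        return Decision(False, f"{name} is not on the allowlist")
    if name == "run_sql" and not READ_ONLY_SQL.match(str(args.get("query", ""))):
        return Decision(False, "only single read-only SELECT/WITH queries are permitted")
    return Decision(True)


def check_output(text: str) -> Decision:
    """Validate the final response; refuse if it appears to leak a credential."""
    if SECRET_PATTERN.search(text):
        return Decision(False, "response appears to contain a credential; refusing")
    return Decision(True)
```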

Performance and Efficiency: What NVIDIA Emphasizes

Nemotron 3 is designed to work cleanly with NVIDIA’s inference stack (e.g., TensorRT-LLM). Even if you’re model-agnostic, the key takeaway is the product-level impact:

- Lower latency → better UX for interactive agents

- Higher throughput → lower cost per action

- More predictable performance → fewer production surprises

| Operational Metric | Why You Should Care for Agents |
|---|---|
| Latency (p95/p99) | Each agent step waits on a model call, so slow tail responses compound across multi-step loops and make the agent feel sluggish |
| Throughput | Directly impacts cost per action and how many agents can run concurrently |
| Memory footprint | Dictates which GPUs and which batch sizes are viable |
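Tail latency and throughput translate directly into user experience and cost. The sketch below computes nearest-rank percentiles from per-step latency samples, throughput over a measurement window, and GPU cost per completed action. Any sample values and the hourly GPU price you plug in are your own measurements; nothing here is a measured Nemotron 3 figure.

```python
import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile, for 0 < p <= 100."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need at least one sample and 0 < p <= 100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def throughput(completed_actions: int, window_s: float) -> float:
    """Completed agent actions per second over a measurement window."""
    return completed_actions / window_s


def cost_per_action(gpu_cost_per_hour: float, actions_per_second: float) -> float:
    """GPU cost attributed to each completed action at a sustained throughput."""
    return gpu_cost_per_hour / (actions_per_second * 3600)
```

For example, with ten latency samples `[0.8, 0.9, 1.0, 1.1, 1.2, 1.3, 1.5, 2.0, 2.5, 6.0]`, p95 and p99 both land on the slowest request, which is why tail metrics need a reasonably large sample. Because an agent action often chains several model calls, its end-to-end latency is roughly the sum of its steps, so a slow tail on any step shows up in the whole action.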

Practical Applications (Real Agent Use Cases)

Autonomous Coding Agents

Nemotron 3 can serve as a coding agent backbone for:

- Debugging and refactoring files

- Writing tests

- Iterating via tool calls (run tests, parse logs, patch code)
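The "run tests, parse logs, patch code" loop needs a compact failure summary to feed back to the model. Assuming the test runner is pytest, this sketch extracts `FAILED`/`ERROR` entries from its "short test summary info" section and formats them as the next prompt. The prompt wording is a hypothetical choice; older pytest versions may omit the ` - message` suffix, so the pattern treats it as optional.

```python
import re

# Matches pytest short-summary lines such as:
#   FAILED tests/test_math.py::test_add - assert 3 == 4
#   ERROR tests/test_io.py::test_read
SUMMARY_LINE = re.compile(r"^(FAILED|ERROR) (\S+)(?: - (.*))?$")


def failing_tests(log: str) -> list[dict]:
    """Collect failing/erroring test node IDs and their one-line messages."""
    failures = []
    for line in log.splitlines():
        match = SUMMARY_LINE.match(line.strip())
        if match:
            status, node_id, message = match.groups()
            failures.append({"status": status, "test": node_id, "message": message or ""})
    return failures


def feedback_prompt(failures: list[dict]) -> str:
    """Compact summary to send back to the model for its next patch attempt."""
    if not failures:
        return "All tests passed."
    lines = [f"{f['status']}: {f['test']} {f['message']}".rstrip() for f in failures]
    return "Fix these failing tests:\n" + "\n".join(lines)
```

Sending only the summary (rather than the full log) keeps each iteration cheap; the agent can still request the full traceback for a specific test with another tool call.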

Enterprise Workflow Automation

Example workflows:

- HR: schedule interviews, extract resume data, update ATS via API

- Finance: reconcile invoices, validate rules, generate structured reports

- IT/Support: triage tickets, collect diagnostics, run scripted checks

Data Analysis and Insight Generation

A typical agent loop:

- Parse a request (e.g., “Compare Q3 sales vs marketing spend”)

- Call DB tools (SQL)

- Run analysis scripts

- Generate a final narrative + charts
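The loop above maps onto a SQL tool plus a small analysis step. This sketch uses Python's built-in `sqlite3` with an in-memory database; the table layout and figures are made up for illustration, and in a real agent the model would write (or select) the query and turn the resulting summary into the final narrative and charts.

```python
import sqlite3


def setup_demo_db() -> sqlite3.Connection:
    """In-memory database with made-up figures, for illustration only."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE sales (quarter TEXT, region TEXT, revenue REAL);
        CREATE TABLE marketing (quarter TEXT, region TEXT, spend REAL);
        INSERT INTO sales VALUES ('Q3', 'EMEA', 500.0), ('Q3', 'AMER', 900.0);
        INSERT INTO marketing VALUES ('Q3', 'EMEA', 100.0), ('Q3', 'AMER', 300.0);
        """
    )
    return conn


def run_sql(conn: sqlite3.Connection, query: str, params: tuple = ()) -> list[tuple]:
    """The 'DB tool' exposed to the agent; parameters are bound, not string-formatted."""
    return conn.execute(query, params).fetchall()


def compare_sales_vs_spend(conn: sqlite3.Connection, quarter: str) -> str:
    """Tool call + analysis step producing a factual summary for the model to narrate."""
    rows = run_sql(
        conn,
        """
        SELECT s.region, s.revenue, m.spend
        FROM sales AS s
        JOIN marketing AS m ON s.quarter = m.quarter AND s.region = m.region
        WHERE s.quarter = ?
        ORDER BY s.region
        """,
        (quarter,),
    )
    if not rows:
        return f"No data for {quarter}."
    lines = [f"{quarter} sales vs marketing spend"]
    for region, revenue, spend in rows:
        # Revenue per unit of marketing spend; guard against zero spend.
        ratio = f"{revenue / spend:.1f}x" if spend else "n/a"
        lines.append(f"{region}: revenue {revenue:.0f}, spend {spend:.0f}, ratio {ratio}")
    return "\n".join(lines)
```

Keeping the arithmetic in code and leaving only the wording to the model reduces the chance of the narrative misquoting numbers.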

How to Get Started

Where to Access Nemotron 3

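NVIDIA states the models are distributed through the NVIDIA NGC catalog and popular model repositories such as Hugging Face. The tiers are rolling out in stages: Nano was released first (its Hugging Face model card is listed in Sources), and NVIDIA has said Super and Ultra will follow, so check which checkpoints are actually published before planning around a specific tier.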

Paths to Deployment

| Path | Best For | Notes |
|---|---|---|
| Local / private | Privacy-first teams, sensitive data | Run the weights in your own environment |
| Private cloud | Scaled internal usage | Combine with guardrails and monitoring |
| Managed access | Fastest integration | Use a managed offering if you’d rather not operate inference infrastructure |
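Whichever path you choose, the client side often looks the same if the deployment exposes an OpenAI-compatible chat-completions endpoint (vLLM's server does, for example; confirm this for your chosen option). The sketch builds such a request with a tool definition using only the standard library. The base URL, model id, and `run_sql` tool are placeholders, and the request is only constructed here, not sent.

```python
import json
import urllib.request
from typing import Optional

# Placeholder tool definition in the OpenAI-style "function" format.
RUN_SQL_TOOL = {
    "type": "function",
    "function": {
        "name": "run_sql",
        "description": "Run a read-only SQL query against the analytics database.",
        "parameters": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    },
}


def build_chat_request(base_url: str, model: str, user_message: str,
                       tools: list[dict], api_key: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a POST to an OpenAI-compatible /v1/chat/completions endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "tools": tools,
        "tool_choice": "auto",  # let the model decide whether to call a tool
    }
    headers = {"Content-Type": "application/json"}
    if api_key:  # managed endpoints typically require a bearer token
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )
```

To actually send it, `urllib.request.urlopen(req)` returns the HTTP response; its JSON body contains the assistant message, including any `tool_calls` the model chose to make. Swapping between local, private-cloud, and managed paths then comes down to changing `base_url`, the model id, and the key.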

Fine-Tuning

If you’re building a niche agent (legal, finance, internal IT), plan for:

- Domain fine-tuning (or instruction tuning)

- Tool-call schema tuning

- Safety and refusal tuning
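For tool-call and refusal tuning, training data usually consists of full trajectories: the user request, the structured call, the tool result, and the final answer. This sketch serializes one such trajectory, plus a refusal example, as JSONL lines in an OpenAI-style message format; that format and the `call_0` id are assumptions, so adapt the field names to whatever your fine-tuning framework expects.

```python
import json


def tool_sft_record(user: str, tool_name: str, arguments: dict,
                    tool_result: str, final_answer: str) -> str:
    """One JSONL line: user -> assistant tool call -> tool result -> final answer."""
    record = {
        "messages": [
            {"role": "user", "content": user},
            {
                "role": "assistant",
                "content": "",
                "tool_calls": [{
                    "id": "call_0",
                    "type": "function",
                    # In the OpenAI-style format, arguments are a JSON-encoded string.
                    "function": {"name": tool_name, "arguments": json.dumps(arguments)},
                }],
            },
            {"role": "tool", "tool_call_id": "call_0", "content": tool_result},
            {"role": "assistant", "content": final_answer},
        ]
    }
    return json.dumps(record, ensure_ascii=False)


def refusal_sft_record(user: str, refusal: str) -> str:
    """One JSONL line where the correct behavior is to decline without calling any tool."""
    record = {"messages": [
        {"role": "user", "content": user},
        {"role": "assistant", "content": refusal},
    ]}
    return json.dumps(record, ensure_ascii=False)
```

Mixing both kinds of records teaches the model when to act and when not to, which matters as much as schema accuracy for agents with real permissions.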

What This Signals About the Market

Nemotron 3 is part of a bigger trend: open, agent-ready foundation models are becoming the default substrate for automation products. NVIDIA’s strategic positioning is clear:

- Not just GPUs and acceleration

- But also a full-stack path: models → tooling → inference → guardrails

For builders, the value is optionality: you can prototype quickly with Nano, then scale up capability tiers as your agent product matures.

Conclusion

Nemotron 3 is a meaningful step toward agentic AI becoming mainstream: long context, tool awareness, and enterprise guardrails are exactly what modern agents require. If your product roadmap includes agents that must plan, act, and remain safe in real systems, Nemotron 3 is positioned as a strong open foundation to evaluate.

Sources

- Nemotron-3 (Official Research Hub) — NVIDIA Research

- NVIDIA Debuts Nemotron-3 Family of Open Models — NVIDIA Newsroom

- NVIDIA Debuts Nemotron 3 Family of Open Models — NVIDIA Korea Blog

- Inside NVIDIA Nemotron-3: Techniques, Tools, and Data That Make It Efficient and Accurate — NVIDIA Developer Blog

- NVIDIA-Nemotron-3-Nano-30B-A3B (Model Card) — Hugging Face